Research in the field of machine learning and AI, now a key technology in practically every industry and company, is far too voluminous for anyone to read it all. This column aims to collect some of the most relevant recent discoveries and papers (particularly in, but not limited to, artificial intelligence) and explain why they matter.

This week, AI applications have been found in several unexpected niches thanks to the technology’s ability to sort through large amounts of data, or alternatively to make sensible predictions based on limited evidence.

We’ve seen machine learning models taking on big data sets in biotech and finance, but researchers at ETH Zurich and LMU Munich are applying similar techniques to the data generated by international development aid projects such as disaster relief and housing. The team trained its model on millions of projects (amounting to $2.8 trillion in funding) from the last 20 years, an enormous dataset that is too complex to be manually analyzed in detail.

“You can think of the process as an attempt to read an entire library and sort similar books into topic-specific shelves. Our algorithm takes into account 200 different dimensions to determine how similar these 3.2 million projects are to each other – an impossible workload for a human being,” said study author Malte Toetzke.
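The library analogy can be made concrete with a toy sketch. This is not the researchers’ algorithm, which the article does not describe; it only assumes that each project is represented as a numeric vector and that “similar” means a high cosine similarity. The three-dimensional vectors, the shelf names, and the helper functions `cosine_similarity` and `shelve` are all hypothetical stand-ins for illustration.

```python
import math


def cosine_similarity(a, b):
    """Cosine of the angle between two equal-length vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0 or norm_b == 0:
        return 0.0  # treat an all-zero vector as similar to nothing
    return dot / (norm_a * norm_b)


def shelve(projects, shelves):
    """Assign each project to the shelf whose vector it is most similar to."""
    assignment = {}
    for name, vector in projects.items():
        best_shelf = max(
            shelves, key=lambda s: cosine_similarity(vector, shelves[s])
        )
        assignment[name] = best_shelf
    return assignment


# Hypothetical 3-dimensional vectors standing in for the 200 dimensions.
shelves = {"climate": [1.0, 0.0, 0.0], "housing": [0.0, 1.0, 0.0]}
projects = {
    "flood_defences": [0.9, 0.1, 0.0],
    "shelter_rebuild": [0.1, 0.8, 0.2],
}

result = shelve(projects, shelves)
print(result)
```

In this toy setup each project lands on the shelf it points toward most closely. A real pipeline over millions of projects would presumably need learned representations and a clustering step rather than fixed shelf vectors.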

Very top-level trends suggest that spending on inclusion and diversity has increased, while climate spending has, surprisingly, decreased in the last few years. The dataset and the trends they analyzed can be examined online.

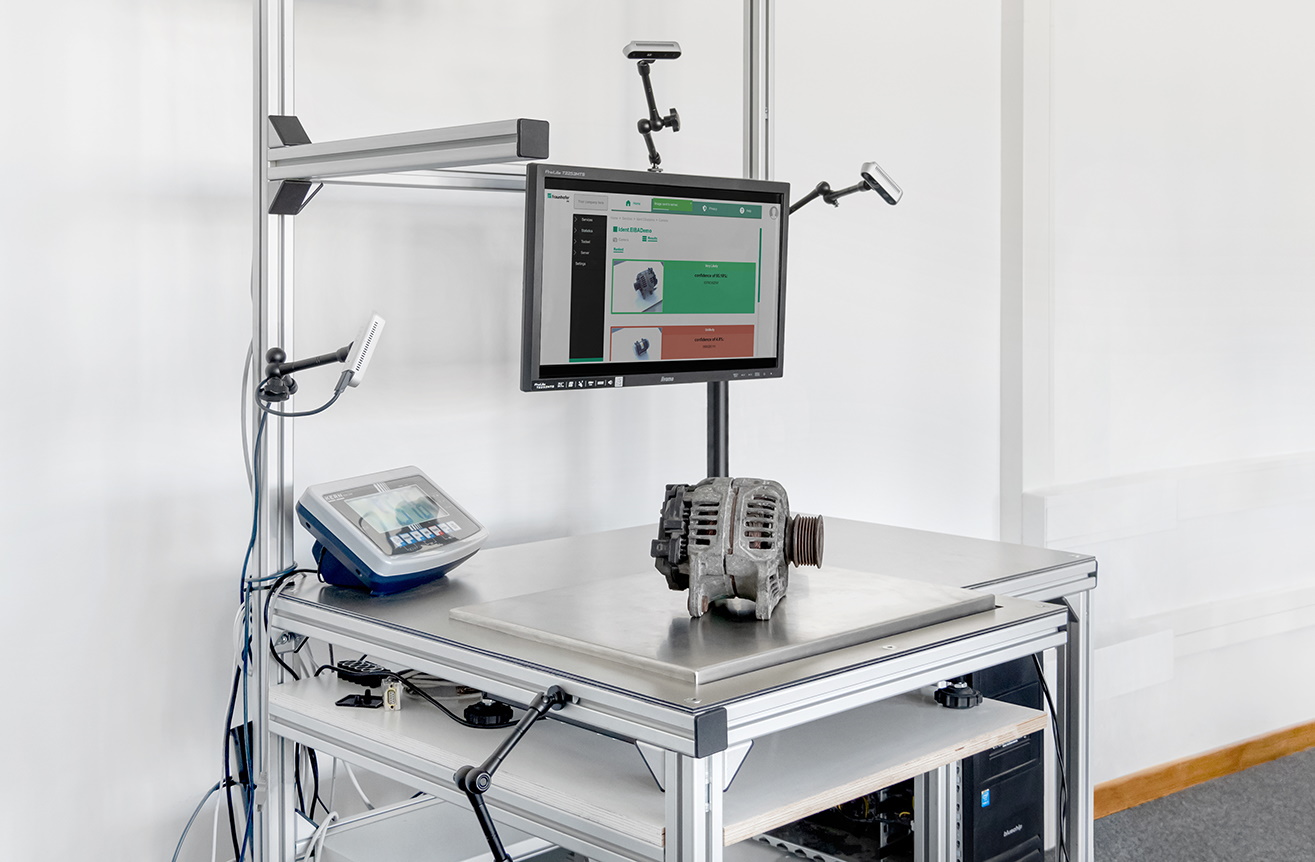

Another area few people think about is the large number of machine parts and components that are produced by various industries at an enormous clip. Some can be reused, some recycled, and others must be disposed of responsibly, but there are too many for human specialists to go through. German R&D outfit Fraunhofer has developed a machine learning model for identifying parts so they can be put to use instead of heading to the scrap yard.

Image Credits: Fraunhofer

The system relies on more than ordinary camera views, since parts may look similar but be very different, or be identical mechanically but differ visually due to rust or wear. So each part is also weighed and scanned by 3D cameras, and metadata like origin is included as well. The model then suggests what it thinks the part is so the person inspecting it doesn’t have to start from scratch. It’s hoped that tens of thousands of parts will soon be saved, and the processing of millions accelerated, by using this AI-assisted identification method.

Physicists have found an interesting way to bring ML’s qualities to bear on a centuries-old problem. Essentially, researchers are always looking for ways to show that the equations that govern fluid dynamics (some of which, like Euler’s, date to the 18th century) are incomplete, meaning that they break at certain extreme values. Using traditional computational techniques this is difficult to do, though not impossible. But researchers at CIT and Hang Seng University in Hong Kong propose a new deep learning method to isolate likely instances of fluid dynamics singularities, while others are applying the technique in other ways to the field. A Quanta article explains this interesting development quite well.

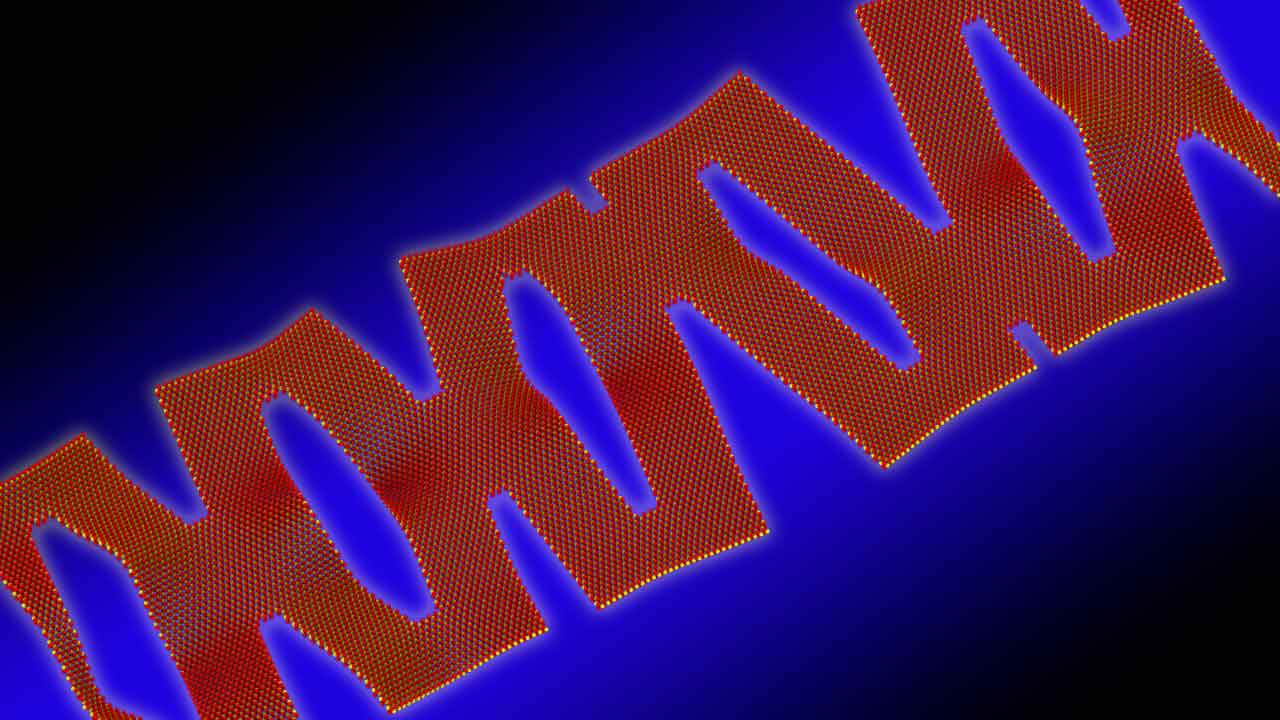

Another centuries-old concept getting an ML layer is kirigami, the art of paper-cutting that many will be familiar with in the context of creating paper snowflakes. The technique goes back centuries in Japan and China in particular, and can produce remarkably complex and flexible structures. Researchers at Argonne National Labs took inspiration from the concept to theorize a 2D material that can retain electronics at microscopic scale but also flex easily.

The team had been doing tens of thousands of experiments with 1-6 cuts manually, and used that data to train the model. They then used a Department of Energy supercomputer to perform simulations down to the molecular level. In seconds it produced a 10-cut variation with 40 percent stretchability, far beyond what the team had expected or even tried on their own.

Image Credits: Argonne National Labs

“It has figured out things we never told it to figure out. It learned something the way a human learns and used its knowledge to do something different,” said project lead Pankaj Rajak. The success has spurred them to increase the complexity and scope of the simulation.

Another interesting extrapolation done by a specially trained AI has a computer vision model reconstructing color data from infrared inputs. Normally a camera capturing IR wouldn’t know anything about what color an object was in the visible spectrum. But this experiment found correlations between certain IR bands and visible ones, and created a model to convert images of human faces captured in IR into ones that approximate the visible spectrum.

It’s still just a proof of concept, but such spectrum flexibility could be a useful tool in science and photography.

—

Meanwhile, a new study coauthored by Google AI lead Jeff Dean pushes back against the notion that AI is an environmentally costly endeavor, owing to its high compute requirements. While some research has found that training a large model like OpenAI’s GPT-3 can generate carbon dioxide emissions equivalent to that of a small neighborhood, the Google-affiliated study contends that “following best practices” can reduce machine learning carbon emissions by up to 1000x.

The practices in question concern the types of models used, the machines used to train models, “mechanization” (e.g., computing in the cloud versus on local computers) and “map” (picking data center locations with the cleanest energy). According to the coauthors, selecting “efficient” models alone can reduce computation by factors of 5 to 10, while using processors optimized for machine learning training, such as GPUs, can improve the performance-per-Watt ratio by factors of 2 to 5.
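To see how such factors could stack up, here is a back-of-the-envelope sketch. It assumes, purely for illustration, that the individual savings are independent and multiply together; the article does not say how the study combines them. The function name `combined_range` is hypothetical, and only the two ranges quoted above are used.

```python
def combined_range(*factor_ranges):
    """Multiply (low, high) improvement factors, assuming they are independent."""
    low, high = 1.0, 1.0
    for factor_low, factor_high in factor_ranges:
        low *= factor_low
        high *= factor_high
    return low, high


# Ranges quoted in the article; treating them as multiplicative is an assumption.
model_factor = (5, 10)      # "efficient" models: 5x to 10x less computation
processor_factor = (2, 5)   # ML-optimized processors: 2x to 5x performance per Watt

low, high = combined_range(model_factor, processor_factor)
print(f"combined reduction: {low:g}x to {high:g}x")
```

Under this assumption the two quoted factors together account for a reduction of roughly 10x to 50x, so the remaining practices would have to supply the rest of any 1000x figure.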

Any thread of research suggesting that AI’s environmental impact can be lessened is cause for celebration, indeed. But it must be pointed out that Google isn’t a neutral party. Many of the company’s products, from Google Maps to Google Search, rely on models that required large amounts of energy to develop and run.

Mike Cook, a member of the Knives and Paintbrushes open research group, points out that, even if the study’s estimates are accurate, there simply isn’t a good reason for a company not to scale up in an energy-inefficient way if it benefits them. While academic groups might pay attention to metrics like carbon impact, companies aren’t incentivized in the same way, at least currently.

“The whole reason we’re having this conversation to begin with is that companies like Google and OpenAI had effectively infinite funding, and chose to leverage it to build models like GPT-3 and BERT at any cost, because they knew it gave them an advantage,” Cook told Avisionews via email. “Overall, I think the paper says some nice stuff and it’s great if we’re thinking about efficiency, but the issue isn’t a technical one in my opinion: we know for a fact that these companies will go big when they need to, they won’t restrain themselves, so saying this is now solved forever just feels like an empty line.”

The final topic for this week isn’t actually about machine learning exactly, but rather what might be a way forward in simulating the brain in a more direct way. EPFL bioinformatics researchers created a mathematical model for creating tons of unique but accurate simulated neurons that could eventually be used to build digital twins of neuroanatomy.

“The findings are already enabling Blue Brain to build biologically detailed reconstructions and simulations of the mouse brain, by computationally reconstructing brain regions for simulations which replicate the anatomical properties of neuronal morphologies and include region specific anatomy,” said researcher Lida Kanari.

Don’t expect sim-brains to make for better AIs, as this is very much in pursuit of advances in neuroscience, but perhaps the insights from simulated neuronal networks may lead to fundamental improvements to the understanding of the processes AI seeks to imitate digitally.