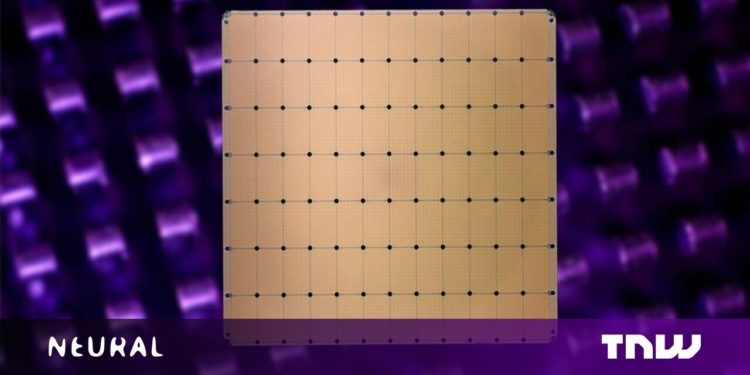

Bigger isn’t always better, but sometimes it is. Cerebras Systems, a company bent on accelerating machine learning systems, built the world’s largest chip last year. In the time since, it’s developed bespoke solutions to some of the biggest problems in the AI industry.

Founded in 2015, Cerebras is something of a reunion tour for most of its C-suite executives. Before building chips the size of dinner plates, the team was responsible for SeaMicro, a company founded in 2007 that eventually sold to AMD for more than $330 million in 2012.

Cerebras Systems is now valued at more than $4 billion.

I interviewed Cerebras Systems co-founder and CEO Andrew Feldman to find out how his company was bellying up to the hardware bar in 2022, an era marked by big tech’s domination of the AI industry.

Feldman seemed unfazed by the prospect of competition from Silicon Valley’s elite.

According to him, Cerebras was built from the ground up to take on all comers:

From day one we wanted to be in the Computer History Museum. We wanted to be in the hall of fame.

That may sound like hubris to an outsider, but the dog-eat-dog world (or, more aptly, the big-dog-buys-little-dog-and-absorbs-its-technology world) of computer hardware is no place for the meek.

For Cerebras to make its mark, Feldman and his team relied on their proven track record and sky-high ambitions to break into the market.

Per Feldman:

We’re system builders, which means we’re unafraid of difficult problems… if you’re 100 times better than (big tech’s best efforts) then there’s nothing they can do.

So the big question is: are Cerebras’ humongous chips that much better than the competition’s?

The answer: there really isn’t any competition. Its chips are more than 50X larger than the biggest GPU on the market (the kind of chip typically used to train machine learning systems).

According to Cerebras’ website, the company’s CS-2 system, built on its WSE-2 “wafer” chips, can replace an entire rack of processors typically used to train AI models:

A single CS-2 typically delivers the wall-clock compute performance of many tens to hundreds of graphics processing units (GPU), or more. In a single system less than one rack in size, the CS-2 delivers answers in minutes or hours that would take days, weeks, or longer on large multi-rack clusters of legacy, general purpose processors.

Based on the benchmarks the company has published, the CS-2 not only offers performance advantages but also has the potential to drastically reduce the amount of power consumed during AI training runs. That’s a win both for businesses trying to solve extremely hard problems and for the planet.

To learn more about Cerebras Systems, check out the white paper for the CS-2 and the company website.