This article is part of Demystifying AI, a series of posts that (try to) disambiguate the jargon and myths surrounding AI. (In partnership with Paperspace)

In recent years, the transformer model has become one of the main highlights of advances in deep learning and deep neural networks. It is mainly used for advanced applications in natural language processing. Google is using it to enhance its search engine results. OpenAI has used transformers to create its famous GPT-2 and GPT-3 models.

Since its debut in 2017, the transformer architecture has evolved and branched out into many different variants, expanding beyond language tasks into other areas. Transformers have been used for time series forecasting. They are the key innovation behind AlphaFold, DeepMind’s protein structure prediction model. Codex, OpenAI’s source-code generation model, is based on transformers. More recently, transformers have found their way into computer vision, where they are slowly replacing convolutional neural networks (CNN) in many complicated tasks.

Researchers are still exploring ways to improve transformers and use them in new applications. Here is a brief explainer about what makes transformers exciting and how they work.

Processing sequences with neural networks

The classic feed-forward neural network is not designed to keep track of sequential data; it simply maps each input to an output. This works for tasks such as classifying images but fails on sequential data such as text. A machine learning model that processes text must not only process every word but also take into account how words come in sequences and relate to each other. The meaning of a word can change depending on the words that come before and after it in the sentence.

Before transformers, recurrent neural networks (RNN) were the go-to solution for natural language processing. When given a sequence of words, an RNN processes the first word and feeds the result back into the layer that processes the next word. This enables it to keep track of the entire sentence instead of processing each word separately.
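A minimal sketch of that recurrence, assuming a plain (Elman-style) RNN with a tanh activation and randomly initialized weights. The names `rnn_forward`, `W_xh`, `W_hh`, and `b_h` are illustrative, not taken from any library. The loop makes the sequential dependency explicit: the hidden state for word t cannot be computed until the state for word t-1 exists.

```python
import numpy as np

def rnn_forward(inputs, W_xh, W_hh, b_h):
    """Run a simple tanh RNN over a sequence, one step at a time.

    inputs: array of shape (seq_len, input_dim), one row per word embedding.
    Returns the hidden state after every step, shape (seq_len, hidden_dim).
    """
    h = np.zeros(W_hh.shape[0])           # initial hidden state
    states = []
    for x_t in inputs:                    # strictly sequential: step t needs h from step t-1
        h = np.tanh(W_xh @ x_t + W_hh @ h + b_h)
        states.append(h)
    return np.stack(states)

rng = np.random.default_rng(0)
seq_len, input_dim, hidden_dim = 5, 8, 16
inputs = rng.normal(size=(seq_len, input_dim))
W_xh = rng.normal(scale=0.1, size=(hidden_dim, input_dim))
W_hh = rng.normal(scale=0.1, size=(hidden_dim, hidden_dim))
b_h = np.zeros(hidden_dim)

states = rnn_forward(inputs, W_xh, W_hh, b_h)
print(states.shape)  # (5, 16)
```

Changing the last word of the sequence leaves all earlier hidden states untouched, since each state only sees the words before it.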

Recurrent neural nets had disadvantages that limited their usefulness. First, they were very slow. Since they had to process data sequentially, they could not take advantage of parallel computing hardware and graphics processing units (GPU) in training and inference. Second, they could not handle long sequences of text. As the RNN got deeper into a text excerpt, the effects of the first words of the sentence gradually faded. This problem, known as “vanishing gradients” (the training signal shrinks as it is propagated back through many time steps), was especially harmful when two linked words were very far apart in the text. And third, they only captured the relations between a word and the words that came before it. In reality, the meaning of a word depends on the words that come both before and after it.
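The vanishing-gradient effect can be illustrated numerically. In a tanh RNN, the gradient connecting hidden state h_t back to h_0 is a product of t per-step Jacobians, each equal to diag(1 - h_t^2) · W_hh. The sketch below assumes recurrent weights rescaled to a spectral norm of 0.5 (an arbitrary choice for illustration), which forces that product to shrink at least geometrically; with large enough weights the same product can instead grow, the related “exploding gradients” problem.

```python
import numpy as np

def jacobian_norms(inputs, W_xh, W_hh):
    """Spectral norm of d h_t / d h_0 for a tanh RNN, for t = 1..len(inputs).

    Chain rule: d h_t / d h_{t-1} = diag(1 - h_t**2) @ W_hh, so d h_t / d h_0
    is a product of t such matrices.
    """
    hidden_dim = W_hh.shape[0]
    h = np.zeros(hidden_dim)
    J = np.eye(hidden_dim)                      # d h_0 / d h_0
    norms = []
    for x_t in inputs:
        h = np.tanh(W_xh @ x_t + W_hh @ h)
        J = np.diag(1.0 - h ** 2) @ W_hh @ J    # multiply in one more step's Jacobian
        norms.append(np.linalg.norm(J, 2))      # largest singular value
    return norms

rng = np.random.default_rng(0)
steps, input_dim, hidden_dim = 30, 8, 16
inputs = rng.normal(size=(steps, input_dim))
W_xh = rng.normal(scale=0.1, size=(hidden_dim, input_dim))
W_hh = rng.normal(size=(hidden_dim, hidden_dim))
W_hh *= 0.5 / np.linalg.norm(W_hh, 2)           # assumption: recurrent weights with spectral norm 0.5

norms = jacobian_norms(inputs, W_xh, W_hh)
for t in (1, 10, 20, 30):
    print(f"step {t:2d}: |dh_t/dh_0| = {norms[t - 1]:.1e}")
```

By step 30 the norm is bounded by 0.5^30, roughly 1e-9, so an error signal from late in the sequence barely reaches the first words.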

Long short-term memory (LSTM) networks, the successor to classic RNNs, were able to solve the vanishing gradients problem to some extent and could handle longer sequences of text. But LSTMs were even slower to train than RNNs and still could not take full advantage of parallel computing. They still relied on the serial processing of text sequences.
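For comparison, here is a sketch of a single step of a standard LSTM cell, assuming the common formulation with input, forget, and output gates; the gate ordering inside the stacked weight matrices is an arbitrary convention of this example, and `lstm_step` is an illustrative name. The additive cell-state update `c_t = f * c_prev + i * g` is what lets information (and gradients) survive over more steps, while the outer loop shows that the computation is still strictly sequential.

```python
import numpy as np

def sigmoid(z):
    return 1.0 / (1.0 + np.exp(-z))

def lstm_step(x_t, h_prev, c_prev, W, U, b):
    """One step of a standard LSTM cell.

    W: (4*H, D) input weights, U: (4*H, H) recurrent weights, b: (4*H,) bias.
    Gate order in the stacked weights (a convention of this sketch): input, forget, output, candidate.
    """
    H = h_prev.shape[0]
    z = W @ x_t + U @ h_prev + b
    i = sigmoid(z[0:H])            # input gate: how much new information to write
    f = sigmoid(z[H:2 * H])        # forget gate: how much of the old cell state to keep
    o = sigmoid(z[2 * H:3 * H])    # output gate: how much of the cell state to expose
    g = np.tanh(z[3 * H:4 * H])    # candidate values to write into the cell
    c_t = f * c_prev + i * g       # additive update of the cell state
    h_t = o * np.tanh(c_t)
    return h_t, c_t

rng = np.random.default_rng(0)
D, H, T = 8, 16, 6
W = rng.normal(scale=0.1, size=(4 * H, D))
U = rng.normal(scale=0.1, size=(4 * H, H))
b = np.zeros(4 * H)

h, c = np.zeros(H), np.zeros(H)
for x_t in rng.normal(size=(T, D)):  # still one step at a time, like a plain RNN
    h, c = lstm_step(x_t, h, c, W, U, b)
print(h.shape, c.shape)  # (16,) (16,)
```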

Transformers, introduced in the 2017 paper “Attention Is All You Need,” made two key contributions. First, they made it possible to process entire sequences in parallel, which allowed the speed and capacity of sequential deep learning models to scale to unprecedented levels. And second, they built the model around “attention mechanisms” that make it possible to track the relations between words across very long text sequences in both forward and reverse directions.

Types of sequence models

Before we discuss how the transformer model works, it’s worth discussing the types of problems that sequential neural networks solve.

A “vector to sequence” model takes a single input, such as an image, and produces a sequence of data, such as a description.

A “sequence to vector” model takes a sequence as input, such as a product review or a social media post, and outputs a single value, such as a sentiment score.

A “sequence to sequence” model takes a sequence as input, such as an English sentence, and outputs another sequence, such as the French translation of the sentence.

Despite their differences, all these types of models have one thing in common: they learn representations. The job of a neural network is to transform one type of data into another. During training, the hidden layers of the neural network (the layers that stand between the input and output) tune their parameters in a way that best represents the features of the input data and maps them to the output.

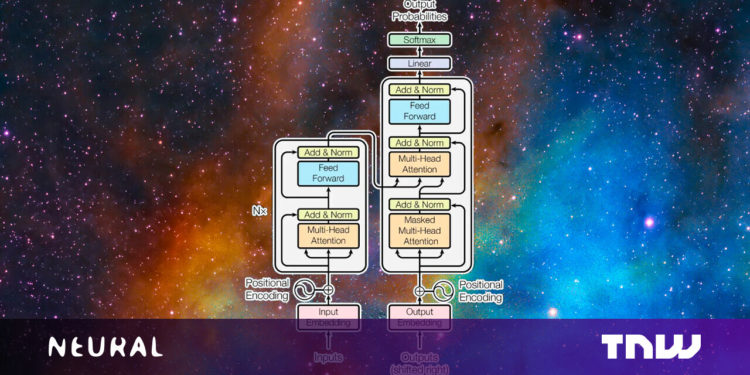

The original transformer was designed as a sequence-to-sequence (seq2seq) model for machine translation (of course, seq2seq models are not limited to translation tasks). It is composed of an encoder module that compresses an input string from the source language into a sequence of vectors that represent the words and their relations to each other. The decoder module transforms these encoded vectors into a string of text in the destination language.

Tokens and embeddings

The input text must be processed and transformed into a unified format before being fed to the transformer. First, the text goes through a “tokenizer,” which breaks it down into chunks of characters that can be processed separately. The tokenization algorithm can depend on the application. In most cases, every word and punctuation mark roughly counts as one token. Some suffixes and prefixes count as separate tokens (e.g., “ize,” “ly,” and “pre”). The tokenizer produces a list of numbers that represent the token IDs of the input text.
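The following toy tokenizer illustrates the idea under heavily simplified, hypothetical rules: it splits words and punctuation with a regular expression and peels off a hand-picked list of affixes (`pre`, `ize`, `ly`). Production tokenizers such as byte-pair encoding (BPE) or WordPiece learn their subword units from data instead; `toy_tokenize` and `build_vocab` are illustrative names, not a real library API.

```python
import re

# Hypothetical, hand-written affix lists; real tokenizers learn subword units from data.
SUFFIXES = ("ize", "ly")
PREFIXES = ("pre",)

def toy_tokenize(text):
    """Split text into words and punctuation, then peel off a few known affixes."""
    pieces = re.findall(r"\w+|[^\w\s]", text.lower())
    tokens = []
    for piece in pieces:
        for p in PREFIXES:
            if piece.startswith(p) and len(piece) > len(p) + 2:
                tokens.append(p)
                piece = piece[len(p):]
                break
        suffix = None
        for s in SUFFIXES:
            if piece.endswith(s) and len(piece) > len(s) + 2:
                suffix = s
                piece = piece[: -len(s)]
                break
        tokens.append(piece)
        if suffix:
            tokens.append(suffix)
    return tokens

def build_vocab(tokens):
    """Assign an integer ID to each distinct token, in order of first appearance."""
    vocab = {}
    for tok in tokens:
        vocab.setdefault(tok, len(vocab))
    return vocab

tokens = toy_tokenize("They quickly preheat and finalize it.")
vocab = build_vocab(tokens)
ids = [vocab[t] for t in tokens]
print(tokens)  # ['they', 'quick', 'ly', 'pre', 'heat', 'and', 'final', 'ize', 'it', '.']
print(ids)     # [0, 1, 2, 3, 4, 5, 6, 7, 8, 9]
```

Here every token is new, so the IDs are simply 0 through 9. A real tokenizer uses a fixed vocabulary built in advance, so the same token always maps to the same ID across texts.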

The tokens are then converted into “word embeddings.” A word embedding is a vector that tries to capture the meaning of a word in a multi-dimensional space. For example, the words “cat” and “dog” can have similar values across some dimensions because they are both used in sentences about animals and house pets. However, “cat” is closer to “lion” than to “wolf” across some other dimension that separates felines from canids. Similarly, “Paris” and “London” might be close to each other because they are both cities. However, “London” is closer to “England” and “Paris” to “France” on a dimension that separates countries. Word embeddings usually have hundreds of dimensions.
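To make the geometry concrete, the sketch below uses hypothetical four-dimensional embeddings whose values were picked by hand, with made-up dimensions labeled animal, house pet, feline vs. canid, and wild. Real embeddings are learned, have hundreds of dimensions, and their individual dimensions rarely map to a single human-readable concept. Cosine similarity is a common way to compare embedding vectors.

```python
import numpy as np

# Hypothetical 4-dimensional embeddings with hand-picked values, chosen only to mirror
# the example in the text. Dimensions (also made up): [animal, house pet, feline(+)/canid(-), wild].
embeddings = {
    "cat":  np.array([1.0, 1.0,  0.5, 0.0]),
    "dog":  np.array([1.0, 1.0, -0.5, 0.0]),
    "lion": np.array([1.0, 0.0,  0.6, 1.0]),
    "wolf": np.array([1.0, 0.0, -0.6, 1.0]),
}

def cosine_similarity(a, b):
    """Cosine of the angle between two vectors: 1 = same direction, 0 = orthogonal."""
    return float(a @ b / (np.linalg.norm(a) * np.linalg.norm(b)))

for other in ("dog", "lion", "wolf"):
    print(other, round(cosine_similarity(embeddings["cat"], embeddings[other]), 3))
```

With these numbers, “cat” is most similar to “dog” overall because they share the animal and pet dimensions, yet on the feline/canid dimension it sits next to “lion” and far from “wolf.”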

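Word embeddings can come from embedding models that are trained separately from the transformer, and there are several pre-trained embedding models used for language tasks. In many transformers, including the original one, the embedding layer is instead learned together with the rest of the network.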

Consideration layers

Once the sentence is transformed into a list of word embeddings, it is fed into the transformer’s encoder module. Unlike RNN and LSTM models, the transformer does not receive one input at a time. It can receive an entire sentence’s worth of embedding values and process them in parallel. This makes transformers more compute-efficient than their predecessors and also enables them to examine the context of the text in both forward and backward directions.

To preserve the sequential nature of the words in the sentence, the transformer applies “positional encoding,” which basically means that it modifies the values of each embedding vector to represent its location in the text. In the original transformer, this is done by adding a position-dependent vector of sine and cosine values to each embedding.
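The sketch below follows the sinusoidal formulas from “Attention Is All You Need,” assuming an even embedding size `d_model`; the random `token_embeddings` array is a stand-in for real word embeddings, and the function name is illustrative. Many later models learn positional embeddings instead of using this fixed scheme.

```python
import numpy as np

def sinusoidal_positional_encoding(seq_len, d_model):
    """Fixed sine/cosine position vectors as defined in "Attention Is All You Need".

    PE[pos, 2i]   = sin(pos / 10000**(2i / d_model))
    PE[pos, 2i+1] = cos(pos / 10000**(2i / d_model))
    Assumes d_model is even.
    """
    positions = np.arange(seq_len)[:, None]               # (seq_len, 1)
    two_i = np.arange(0, d_model, 2)[None, :]              # even dimension indices 0, 2, 4, ...
    angles = positions / np.power(10000.0, two_i / d_model)  # (seq_len, d_model / 2)
    pe = np.zeros((seq_len, d_model))
    pe[:, 0::2] = np.sin(angles)                           # even dimensions get sine
    pe[:, 1::2] = np.cos(angles)                           # odd dimensions get cosine
    return pe

rng = np.random.default_rng(0)
seq_len, d_model = 10, 16
token_embeddings = rng.normal(size=(seq_len, d_model))    # stand-in for real word embeddings
encoder_input = token_embeddings + sinusoidal_positional_encoding(seq_len, d_model)
```

Each position gets a distinct vector, and the same word placed at two different positions therefore enters the encoder with two different values.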

Next, the input is passed to the first encoder block, which processes it through an “attention layer.” The attention layer tries to capture the relations between the words in the sentence. For example, consider the sentence “The big black cat crossed the road after it dropped a bottle on its side.” Here, the model must associate “it” with “cat” and “its” with “bottle.” It should also establish other associations, such as “big” and “cat” or “crossed” and “cat.” In other words, the attention layer receives a list of word embeddings that represent the values of individual words and produces a list of vectors that represent both individual words and their relations to each other. The attention layer contains multiple “attention heads,” each of which can capture different kinds of relations between words.
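The core computation inside an attention head is scaled dot-product attention, softmax(QKᵀ/√d_k)V, where the queries Q, keys K, and values V are linear projections of the word vectors. Below is a minimal NumPy sketch of that formula plus a simple multi-head wrapper, assuming random (untrained) projection matrices `W_q`, `W_k`, `W_v`, and `W_o`; real implementations add batching, dropout, and learned biases.

```python
import numpy as np

def softmax(x, axis=-1):
    x = x - x.max(axis=axis, keepdims=True)     # subtract max for numerical stability
    e = np.exp(x)
    return e / e.sum(axis=axis, keepdims=True)

def scaled_dot_product_attention(Q, K, V, mask=None):
    """softmax(Q K^T / sqrt(d_k)) V. Q: (n_q, d_k), K: (n_k, d_k), V: (n_k, d_v).

    mask (optional): boolean (n_q, n_k) array, True where attention is allowed.
    """
    d_k = Q.shape[-1]
    scores = Q @ K.T / np.sqrt(d_k)             # how strongly each query matches each key
    if mask is not None:
        scores = np.where(mask, scores, -1e9)   # blocked positions get ~zero weight
    weights = softmax(scores, axis=-1)          # each row sums to 1
    return weights @ V, weights

def multi_head_self_attention(X, W_q, W_k, W_v, W_o, num_heads):
    """Self-attention over X (seq_len, d_model), split into num_heads independent heads."""
    seq_len, d_model = X.shape
    d_head = d_model // num_heads
    Q, K, V = X @ W_q, X @ W_k, X @ W_v         # project every token in parallel
    heads = []
    for h in range(num_heads):
        cols = slice(h * d_head, (h + 1) * d_head)   # each head uses its own slice of the projections
        out, _ = scaled_dot_product_attention(Q[:, cols], K[:, cols], V[:, cols])
        heads.append(out)
    return np.concatenate(heads, axis=-1) @ W_o  # recombine the heads into d_model features

rng = np.random.default_rng(0)
seq_len, d_model, num_heads = 6, 16, 4
X = rng.normal(size=(seq_len, d_model))
W_q, W_k, W_v, W_o = (rng.normal(scale=d_model ** -0.5, size=(d_model, d_model)) for _ in range(4))
Y = multi_head_self_attention(X, W_q, W_k, W_v, W_o, num_heads)
print(Y.shape)  # (6, 16)
```

Every token is projected and compared with every other token in a few matrix multiplications rather than a loop over time steps, which is what lets transformers exploit parallel hardware. Reversing the order of the input rows simply reverses the order of the output rows: attention by itself is blind to word order, which is why positional encoding is needed.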

The output of the attention layer is fed to a feed-forward neural network that transforms it into a vector representation and sends it to the next attention layer. Transformers contain several blocks of attention and feed-forward layers to gradually capture more complicated relationships.
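A sketch of one encoder block and a stack of three, assuming single-head attention for brevity. Besides the attention and feed-forward sublayers described above, the original transformer wraps each sublayer in a residual connection followed by layer normalization, so the sketch includes those as well. Layer sizes, initialization scales, and the `init_block` helper are illustrative choices, not values from the paper.

```python
import numpy as np

def softmax(x):
    e = np.exp(x - x.max(axis=-1, keepdims=True))
    return e / e.sum(axis=-1, keepdims=True)

def layer_norm(x, eps=1e-5):
    """Normalize each token vector to zero mean and unit variance (no learned scale/shift here)."""
    mean = x.mean(axis=-1, keepdims=True)
    var = x.var(axis=-1, keepdims=True)
    return (x - mean) / np.sqrt(var + eps)

def self_attention(X, W_q, W_k, W_v):
    """Single-head scaled dot-product self-attention."""
    Q, K, V = X @ W_q, X @ W_k, X @ W_v
    return softmax(Q @ K.T / np.sqrt(Q.shape[-1])) @ V

def feed_forward(X, W1, b1, W2, b2):
    """Position-wise feed-forward network: the same two-layer MLP applied to every token."""
    return np.maximum(0.0, X @ W1 + b1) @ W2 + b2   # ReLU between the two linear layers

def encoder_block(X, p):
    """Attention sublayer, then feed-forward sublayer, each followed by a residual
    connection and layer normalization (the "post-norm" layout of the original paper)."""
    X = layer_norm(X + self_attention(X, p["W_q"], p["W_k"], p["W_v"]))
    X = layer_norm(X + feed_forward(X, p["W1"], p["b1"], p["W2"], p["b2"]))
    return X

def init_block(rng, d_model, d_ff):
    """Hypothetical helper: random (untrained) parameters for one block."""
    s = d_model ** -0.5
    return {
        "W_q": rng.normal(scale=s, size=(d_model, d_model)),
        "W_k": rng.normal(scale=s, size=(d_model, d_model)),
        "W_v": rng.normal(scale=s, size=(d_model, d_model)),
        "W1": rng.normal(scale=s, size=(d_model, d_ff)), "b1": np.zeros(d_ff),
        "W2": rng.normal(scale=d_ff ** -0.5, size=(d_ff, d_model)), "b2": np.zeros(d_model),
    }

rng = np.random.default_rng(0)
seq_len, d_model, d_ff, num_blocks = 6, 16, 64, 3
X = rng.normal(size=(seq_len, d_model))
blocks = [init_block(rng, d_model, d_ff) for _ in range(num_blocks)]
for p in blocks:          # stack several blocks; each one refines the previous representation
    X = encoder_block(X, p)
print(X.shape)  # (6, 16)
```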

The task of the decoder module is to translate the encoder’s attention vectors into the output data (e.g., the translated version of the input text). During the training phase, the decoder has access both to the attention vectors produced by the encoder and to the expected outcome (e.g., the translated string).

The decoder uses the same tokenization, word embedding, and attention mechanism to process the expected outcome and create its own attention vectors. It then passes these vectors, together with the encoder’s output, to another attention layer that establishes relations between the input and output values. In the translation application, this is the part where the words from the source and destination languages are mapped to each other. As in the encoder module, the decoder’s attention vector is passed through a feed-forward layer. The result is then mapped to a very large vector the size of the target vocabulary (in the case of language translation, this can span tens of thousands of words).
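The decoder-to-encoder step is usually called cross-attention: queries come from the decoder, while keys and values come from the encoder’s output. The sketch below shows that step and the final projection onto the vocabulary, assuming random stand-ins for the encoder and decoder states and an illustrative vocabulary of 32,000 tokens; for brevity it skips the decoder’s own feed-forward layer and residual connections.

```python
import numpy as np

def softmax(x):
    e = np.exp(x - x.max(axis=-1, keepdims=True))
    return e / e.sum(axis=-1, keepdims=True)

def cross_attention(dec_X, enc_out, W_q, W_k, W_v):
    """Queries come from the decoder; keys and values come from the encoder output.

    Returns (output, weights); weights[i, j] is how much target position i
    attends to source position j.
    """
    Q = dec_X @ W_q
    K, V = enc_out @ W_k, enc_out @ W_v
    weights = softmax(Q @ K.T / np.sqrt(Q.shape[-1]))
    return weights @ V, weights

rng = np.random.default_rng(0)
src_len, tgt_len, d_model, vocab_size = 7, 5, 16, 32000   # vocab_size is illustrative
enc_out = rng.normal(size=(src_len, d_model))   # stand-in for the encoder's output vectors
dec_X = rng.normal(size=(tgt_len, d_model))     # stand-in for the decoder's self-attention output
W_q, W_k, W_v = (rng.normal(scale=d_model ** -0.5, size=(d_model, d_model)) for _ in range(3))

ctx, align = cross_attention(dec_X, enc_out, W_q, W_k, W_v)

# Final linear layer: one score (logit) per word in the target vocabulary, per position.
W_vocab = rng.normal(scale=d_model ** -0.5, size=(d_model, vocab_size))
probs = softmax(ctx @ W_vocab)                  # (tgt_len, vocab_size) probability distributions
predicted_ids = probs.argmax(axis=-1)           # most likely token ID at each target position
```

Each row of `align` is a probability distribution over source positions; in a trained model, this is where words of the source sentence get associated with words of the translation.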

Coaching the transformer

During training, the transformer is given a very large corpus of paired examples (e.g., English sentences and their corresponding French translations). The encoder module receives and processes the full input string. The decoder, however, receives a masked version of the output string, in which each position can only see the words before it, and tries to establish the mappings between the encoded attention vectors and the expected outcome. The decoder tries to predict the next word and makes corrections based on the difference between its output and the expected outcome. This feedback enables the transformer to modify the parameters of the encoder and decoder and gradually create the right mappings between the input and output languages.
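In practice, this masking is usually implemented with a causal mask plus teacher forcing: the decoder receives the whole target sentence shifted right by one token, and the mask stops each position from attending to later ones, so every next-word prediction can be trained in parallel. The sketch below assumes hypothetical token IDs (including `BOS`/`EOS` markers) and random scores in place of a real model, and uses cross-entropy as the loss that measures the gap between prediction and expected outcome.

```python
import numpy as np

def causal_mask(n):
    """Boolean (n, n) mask: position i may attend only to positions 0..i."""
    return np.tril(np.ones((n, n), dtype=bool))

def masked_softmax(scores, mask):
    scores = np.where(mask, scores, -np.inf)            # hide "future" positions
    e = np.exp(scores - scores.max(axis=-1, keepdims=True))
    return e / e.sum(axis=-1, keepdims=True)

def next_token_loss(logits, target_ids):
    """Average cross-entropy between predicted distributions and the true next tokens."""
    logits = logits - logits.max(axis=-1, keepdims=True)
    log_probs = logits - np.log(np.exp(logits).sum(axis=-1, keepdims=True))
    return float(-log_probs[np.arange(len(target_ids)), target_ids].mean())

BOS, EOS = 1, 2                            # hypothetical special-token IDs
target = np.array([BOS, 17, 42, 8, EOS])   # hypothetical token IDs of a French sentence
decoder_input = target[:-1]                # [BOS, 17, 42, 8]: what the decoder sees
labels = target[1:]                        # [17, 42, 8, EOS]: what it must predict at each position

rng = np.random.default_rng(0)
n = len(decoder_input)
weights = masked_softmax(rng.normal(size=(n, n)), causal_mask(n))
print(np.round(weights, 2))                # upper triangle is exactly 0: no peeking at later words

vocab_size = 50
logits = rng.normal(size=(n, vocab_size))  # stand-in for the decoder's output scores
print(next_token_loss(logits, labels))
print(next_token_loss(np.zeros((n, vocab_size)), labels))  # uniform guess: ln(50), about 3.912
```

Backpropagating this loss through the decoder and the encoder is what adjusts their parameters.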

The more training data and parameters the transformer has, the more capacity it gains to maintain coherence and consistency across long sequences of text.

Variations of the transformer

In the machine translation example that we examined above, the encoder module of the transformer learns the relations between English words and sentences, and the decoder learns the mappings between English and French.

But not all transformer applications require both the encoder and decoder modules. For example, the GPT family of large language models uses stacks of decoder modules to generate text. BERT, another variation of the transformer model developed by researchers at Google, only uses encoder modules.

The advantage of some of these architectures is that they can be trained through self-supervised learning or unsupervised methods. BERT, for example, does much of its training by taking large corpora of unlabeled text, masking parts of it, and trying to predict the missing parts. It then tunes its parameters based on how close its predictions were to the actual data. By repeatedly going through this process, BERT captures the statistical relations between different words in different contexts. After this pretraining phase, BERT can be fine-tuned for a downstream task such as question answering, text summarization, or sentiment analysis by training it on a small number of labeled examples.
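BERT’s masking step can be sketched as follows. The 15% selection rate and the 80/10/10 split between [MASK], random, and unchanged tokens come from the BERT paper; the token IDs, `MASK_ID`, `VOCAB_SIZE`, and the `IGNORE` label value are hypothetical placeholders rather than the values of any particular tokenizer.

```python
import numpy as np

MASK_ID = 103        # hypothetical ID for the [MASK] token
VOCAB_SIZE = 30000   # hypothetical vocabulary size
IGNORE = -100        # hypothetical label value meaning "no prediction needed here"

def mask_tokens(token_ids, rng, mask_prob=0.15):
    """BERT-style masking of a 1-D array of token IDs.

    Picks ~15% of positions as prediction targets. Of those, 80% become [MASK],
    10% become a random token, and 10% are left unchanged.
    Returns (corrupted_inputs, labels).
    """
    token_ids = np.asarray(token_ids)
    inputs = token_ids.copy()
    labels = np.full_like(token_ids, IGNORE)

    selected = rng.random(token_ids.shape) < mask_prob
    labels[selected] = token_ids[selected]        # the model must recover these originals

    roll = rng.random(token_ids.shape)
    to_mask = selected & (roll < 0.8)
    to_random = selected & (roll >= 0.8) & (roll < 0.9)
    inputs[to_mask] = MASK_ID
    inputs[to_random] = rng.integers(0, VOCAB_SIZE, size=to_random.sum())
    # The remaining selected positions (roll >= 0.9) keep their original token.
    return inputs, labels

rng = np.random.default_rng(0)
ids = rng.integers(1000, 2000, size=10_000)   # stand-in for a tokenized unlabeled corpus
inputs, labels = mask_tokens(ids, rng)
print((labels != IGNORE).mean())              # roughly 0.15
```

During pretraining, the prediction loss is computed only at positions whose label is not `IGNORE`, which is how the model learns from plain unlabeled text.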

Using unsupervised and self-supervised pretraining reduces the manual effort required to annotate training data.

Much more can be said about transformers and the new applications they are unlocking, but that is beyond the scope of this article. Researchers are still finding ways to squeeze more out of transformers.

Transformers have also sparked discussions about language understanding and artificial general intelligence. What is clear is that transformers, like other neural networks, are statistical models that capture regularities in data in clever and complicated ways. They do not “understand” language the way humans do. But they are exciting and useful nonetheless and have a lot to offer.

This article was originally written by Ben Dickson and published by Ben Dickson on TechTalks, a publication that examines trends in technology, how they affect the way we live and do business, and the problems they solve. But we also discuss the evil side of technology, the darker implications of new tech, and what we need to look out for. You can read the original article here.