Who will probably be liable for dangerous speech generated by massive language fashions? As superior AIs resembling OpenAI’s GPT-3 are being cheered for spectacular breakthroughs in pure language processing and technology — and all types of (productive) functions for the tech are envisaged from slicker copywriting to extra succesful customer support chatbots — the dangers of such highly effective text-generating instruments inadvertently automating abuse and spreading smears can’t be ignored. Nor can the danger of unhealthy actors deliberately weaponizing the tech to unfold chaos, scale hurt and watch the world burn.

Certainly, OpenAI is anxious sufficient concerning the dangers of its fashions going “completely off the rails,” as its documentation puts it at one level (in reference to a response instance by which an abusive buyer enter is met with a really troll-esque AI reply), to supply a free content material filter that “goals to detect generated textual content that may very well be delicate or unsafe coming from the API” — and to suggest that customers don’t return any generated textual content that the filter deems “unsafe.” (To be clear, its documentation defines “unsafe” to imply “the textual content incorporates profane language, prejudiced or hateful language, one thing that may very well be NSFW or textual content that portrays sure teams/individuals in a dangerous method.”).

However, given the novel nature of the expertise, there are not any clear authorized necessities that content material filters have to be utilized. So OpenAI is both appearing out of concern to keep away from its fashions inflicting generative harms to individuals — and/or reputational concern — as a result of if the expertise will get related to immediate toxicity that would derail improvement.

Simply recall Microsoft’s ill-fated Tay AI Twitter chatbot — which launched again in March 2016 to loads of fanfare, with the corporate’s analysis workforce calling it an experiment in “conversational understanding.” But it took less than a day to have its plug yanked by Microsoft after internet customers ‘taught’ the bot to spout racist, antisemitic and misogynistic hate tropes. So it ended up a special form of experiment: In how on-line tradition can conduct and amplify the worst impulses people can have.

The identical types of bottom-feeding web content material has been sucked into at the moment’s massive language fashions — as a result of AI mannequin builders have crawled all around the web to acquire the huge corpuses of free textual content they should practice and dial up their language producing capabilities. (For instance, per Wikipedia, 60% of the weighted pre-training dataset for OpenAI’s GPT-3 got here from a filtered model of Widespread Crawl — aka a free dataset comprised of scraped internet information.) Which implies these much more highly effective massive language fashions can, nonetheless, slip into sarcastic trolling and worse.

European policymakers are barely grappling with tips on how to regulate on-line harms in present contexts like algorithmically sorted social media platforms, the place a lot of the speech can at the least be traced again to a human — not to mention contemplating how AI-powered textual content technology may supercharge the issue of on-line toxicity whereas creating novel quandaries round legal responsibility.

And with out clear legal responsibility it’s prone to be tougher to stop AI programs from getting used to scale linguistic harms.

Take defamation. The legislation is already dealing with challenges with responding to mechanically generated content material that’s merely mistaken.

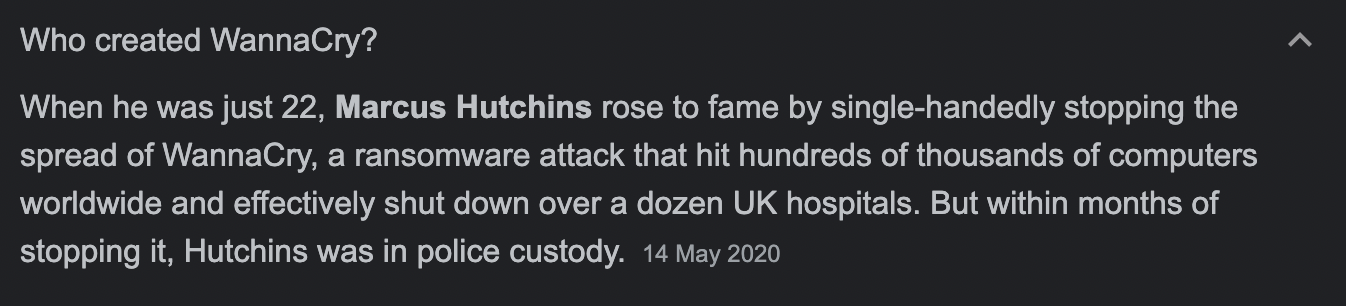

Safety analysis Marcus Hutchins took to TikTok just a few months again to point out his follows how he’s being “bullied by Google’s AI,” as he put it. In a remarkably chipper clip, contemplating he’s explaining a Kafka-esque nightmare by which one of many world’s most dear firms regularly publishes a defamatory suggestion about him, Hutchins explains that in the event you google his identify the search engine outcomes web page (SERP) it returns contains an mechanically generated Q&A — by which Google erroneously states that Hutchins made the WannaCry virus.

Hutchins is definitely well-known for stopping WannaCry. But Google’s AI has grasped the mistaken finish of the stick on this not-at-all-tricky to differentiate important distinction — and, seemingly, retains getting it mistaken. Repeatedly. (Presumably as a result of so many on-line articles cite Hutchins’ identify in the identical span of textual content as referencing ‘WannaCry’ — however that’s as a result of he’s the man who stopped the worldwide ransomeware assault from being even worse than it was. So that is some actual synthetic stupidity in motion by Google.)

To the purpose the place Hutchins says he’s all however given up making an attempt to get the corporate to cease defaming him by fixing its misfiring AI.

“The principle drawback that’s made this so laborious is whereas elevating sufficient noise on Twitter received a few the problems fastened, because the complete system is automated it simply provides extra later and it’s like enjoying whack-a-mole,” Hutchins instructed Avisionews. “It’s received to the purpose the place I can’t justify elevating the difficulty anymore as a result of I simply sound like a damaged report and other people get irritated.”

Within the months since we requested Google about this misguided SERP the Q&A it associates with Hutchins has shifted — so as a substitute of asking “What virus did Marcus Hutchins make?” — and surfacing a one phrase (incorrect) reply straight under: “WannaCry,” earlier than providing the (appropriate) context in an extended snippet of textual content sourced from a information article, because it was in April, a seek for Hutchins’ identify now ends in Google displaying the query “Who created WannaCry” (see screengrab under). But it surely now simply fails to reply its personal query — because the snippet of textual content it shows under solely talks about Hutchins stopping the unfold of the virus.

Picture Credit: Natasha Lomas/Avisionews (screengrab)

So Google has — we should assume — tweaked how the AI shows the Q&A format for this SERP. However in doing that it’s damaged the format (as a result of the query it poses isn’t answered).

Furthermore, the deceptive presentation which pairs the query “Who created WannaCry?” with a seek for Hutchins’ identify, may nonetheless lead an online consumer who shortly skims the textual content Google shows after the query to wrongly imagine he’s being named because the writer of the virus. So it’s not clear it’s a lot/any enchancment on what was being mechanically generated earlier than.

In earlier remarks to Avisionews, Hutchins additionally made the purpose that the context of the query itself, in addition to the way in which the consequence will get featured by Google, can create the deceptive impression he made the virus — including: “It’s unlikely somebody googling for say a faculty undertaking goes to learn the entire article once they really feel like the reply is correct there.”

He additionally connects Google’s mechanically generated textual content to direct, private hurt, telling us: “Ever since google began that includes these SERPs, I’ve gotten an enormous spike in hate feedback and even threats based mostly on me creating WannaCry. The timing of my authorized case gives the look that the FBI suspected me however a fast [Google search] would affirm that’s not the case. Now there’s all types of SERP outcomes which indicate I did, confirming the searcher’s suspicious and it’s brought about reasonably plenty of injury to me.”

Requested for a response to his grievance, Google despatched us this assertion attributed to a spokesperson:

The queries on this characteristic are generated mechanically and are supposed to spotlight different widespread associated searches. We now have programs in place to stop incorrect or unhelpful content material from showing on this characteristic. Typically, our programs work effectively, however they don’t have an ideal understanding of human language. Once we turn out to be conscious of content material in Search options that violates our policies, we take swift motion, as we did on this case.

The tech large didn’t reply to follow-up questions mentioning that its “motion” retains failing to deal with Hutchins’ grievance.

That is in fact only one instance — however it appears to be like instructive that a person, with a comparatively massive on-line presence and platform to amplify his complaints about Google’s ‘bullying AI,’ actually can’t cease the corporate from making use of automation expertise that retains surfacing and repeating defamatory ideas about him.

In his TikTok video, Hutchins suggests there’s no recourse for suing Google over the difficulty within the US — saying that’s “basically as a result of the AI is just not legally an individual nobody is legally liable; it could actually’t be thought-about libel or slander.”

Libel legislation varies relying on the nation the place you file a grievance. And it’s doable Hutchins would have a greater probability of getting a court-ordered repair for this SERP if he filed a grievance in sure European markets resembling Germany — the place Google has beforehand been sued for defamation over autocomplete search ideas (albeit the result of that authorized motion, by Bettina Wulff, is much less clear however it seems that the claimed false autocomplete ideas she had complained have been being linked to her identify by Google’s tech did get fastened) — reasonably than within the U.S., the place Part 230 of the Communications Decency Act offers normal immunity for platforms from legal responsibility for third-party content material.

Though, within the Hutchins SERP case, the query of whose content material that is, precisely, is one key consideration. Google would most likely argue its AI is simply reflecting what others have beforehand revealed — ergo, the Q&A ought to be wrapped in Part 230 immunity. But it surely is likely to be doable to (counter) argue that the AI’s choice and presentation of textual content quantities to a considerable remixing which implies that speech — or, at the least, context — is definitely being generated by Google. So ought to the tech large actually get pleasure from safety from legal responsibility for its AI-generated textual association?

For big language fashions, it’s going to absolutely get tougher for mannequin makers to dispute that their AIs are producing speech. However particular person complaints and lawsuits don’t appear to be a scalable repair for what may, doubtlessly, turn out to be massively scaled automated defamation (and abuse) — due to the elevated energy of those massive language fashions and increasing entry as APIs are opened up.

Regulators are going to want to grapple with this challenge — and with the place legal responsibility lies for communications which might be generated by AIs. Which implies grappling with the complexity of apportioning legal responsibility, given what number of entities could also be concerned in making use of and iterating massive language fashions, and shaping and distributing the outputs of those AI programs.

Within the European Union, regional lawmakers are forward of the regulatory curve as they’re at present working to hash out the small print of a risk-based framework the Fee proposed final yr to set guidelines for sure functions of synthetic intelligence to attempt to make sure that extremely scalable automation applied sciences are utilized in a manner that’s protected and non-discriminatory.

But it surely’s not clear that the EU’s AI Act — as drafted — would provide sufficient checks and balances on malicious and/or reckless functions of enormous language fashions as they’re classed as normal function AI programs that have been excluded from the unique Fee draft.

The Act itself units out a framework that defines a restricted set of “excessive danger” classes of AI software, resembling employment, legislation enforcement, biometric ID and so forth, the place suppliers have the very best degree of compliance necessities. However a downstream applier of a big language mannequin’s output — who’s doubtless counting on an API to pipe the potential into their explicit area use case — is unlikely to have the required entry (to coaching information, and so forth.) to have the ability to perceive the mannequin’s robustness or dangers it’d pose; or to make modifications to mitigate any issues they encounter, resembling by retraining the mannequin with completely different datasets.

Authorized specialists and civil society teams in Europe have raised issues over this carve out for normal function AIs. And over a newer partial compromise textual content that’s emerged throughout co-legislator discussions has proposed together with an article on normal function AI programs. However, writing in Euroactiv final month, two civil society teams warned the urged compromise would create a continued carve-out for the makers of normal function AIs — by placing all of the duty on deployers who make use of programs whose workings they’re not, by default, aware of.

“Many information governance necessities, significantly bias monitoring, detection and correction, require entry to the datasets on which AI programs are educated. These datasets, nevertheless, are within the possession of the builders and never of the consumer, who places the final function AI system ‘into service for an meant function.’ For customers of those programs, due to this fact, it merely won’t be doable to fulfil these information governance necessities,” they warned.

One authorized professional we spoke to about this, the web legislation tutorial Lilian Edwards — who has beforehand critiqued plenty of limitations of the EU framework — stated the proposals to introduce some necessities on suppliers of enormous, upstream general-purpose AI programs are a step ahead. However she urged enforcement appears to be like tough. And whereas she welcomed the proposal so as to add a requirement that suppliers of AI programs resembling massive language fashions should “cooperate with and supply the required data” to downstream deployers, per the newest compromise textual content, she identified that an exemption has additionally been urged for IP rights or confidential enterprise data/commerce secrets and techniques — which dangers fatally undermining your entire obligation.

So, TL;DR: Even Europe’s flagship framework for regulating functions of synthetic intelligence nonetheless has a approach to go to latch onto the slicing fringe of AI — which it should do if it’s to stay as much as the hype as a claimed blueprint for reliable, respectful, human-centric AI. In any other case a pipeline of tech-accelerated harms appears to be like all however inevitable — offering limitless gas for the net tradition wars (spam ranges of push-button trolling, abuse, hate speech, disinformation!) — and organising a bleak future the place focused people and teams are left firefighting a endless move of hate and lies. Which might be the other of honest.

The EU had made a lot of the pace of its digital lawmaking lately however the bloc’s legislators should assume exterior the field of current product guidelines on the subject of AI programs in the event that they’re to place significant guardrails on quickly evolving automation applied sciences and keep away from loopholes that permit main gamers maintain sidestepping their societal duties. Nobody ought to get a go for automating hurt — irrespective of the place within the chain a ‘black field’ studying system sits, nor how massive or small the consumer — else it’ll be us people left holding a darkish mirror.